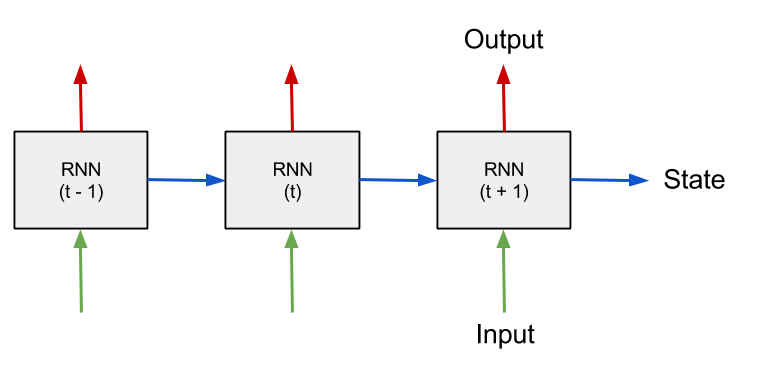

RNN reading, or reading a time series from front to back

A diagram of an RNN shows the previous node connected to the current node. The connection is drawn in two dimensions, with the previous node on the left and the current node on the right.

The input tensor, however, is three-dimensional: the previous input sits in front and the current input sits behind it.

    In [1]: tensor
    Out[1]:
    array([[[3, 7],
            [7, 0],
            [2, 0],
            [3, 9],
            [1, 2]],

           [[4, 4],
            [6, 0],
            [2, 4],
            [3, 4],
            [3, 0]]])

    In [2]: tensor.shape
    Out[2]: (2, 5, 2)
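To make the "previous input, then current input" ordering concrete, here is a minimal sketch of how a plain tanh RNN could walk through this tensor one time step at a time. It assumes the shape `(2, 5, 2)` means (batch, time steps, features), which the session above does not state. The function `rnn_forward`, the hidden size of 3, and the random weights are all hypothetical, chosen only for illustration.

```python
import numpy as np

# Same values as the tensor shown above; shape (2, 5, 2).
# Assumption: the axes are (batch, time steps, features).
tensor = np.array([[[3, 7], [7, 0], [2, 0], [3, 9], [1, 2]],
                   [[4, 4], [6, 0], [2, 4], [3, 4], [3, 0]]])


def rnn_forward(x, w_xh, w_hh, b_h):
    """Run a plain tanh RNN over x, one time step after another."""
    batch, steps, _ = x.shape
    h = np.zeros((batch, w_hh.shape[0]))  # initial hidden state
    states = []
    for t in range(steps):
        x_t = x[:, t, :]  # the slice for time step t, shape (batch, features)
        # The previous state h feeds into the current one.
        h = np.tanh(x_t @ w_xh + h @ w_hh + b_h)
        states.append(h)
    return np.stack(states, axis=1)  # shape (batch, steps, hidden)


# Hypothetical weights: hidden size 3, fixed seed for repeatability.
rng = np.random.default_rng(0)
hidden = 3
w_xh = rng.normal(size=(2, hidden))
w_hh = rng.normal(size=(hidden, hidden))
b_h = np.zeros(hidden)

states = rnn_forward(tensor, w_xh, w_hh, b_h)
print(states.shape)  # (2, 5, 3)
```

Each pass through the loop takes one slice along the time axis, so the slices are consumed one behind another rather than side by side.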

So if you redraw the RNN structure to match the tensor (three dimensions), you can put the previous node in front and the current one behind it. This gave me a new insight: when you read a sentence, you can imagine the words streaming in along the front-back axis, rather than along the left-right axis on which they are printed. This insight may improve one's reading ability. For the human brain, movement from left to right (or from right to left, or from top to bottom) is not natural, because humans walk from back to front. With this insight in mind, I tried reading a novel and checked how my reading fluency improved.

Imagining the words streaming from back to front eased the compulsion to move my eye focus from left to right. That relief lets me concentrate on simply grasping the meaning of the sentence. I call this reading method "RNN reading". I would like to know how a person with dyslexia feels when trying to read with this method.